Moderate NSFW & Profanity

Learn how to use FastPix to detect NSFW and profane content in video or audio using confidence scores and the moderation API.

FastPix’s video moderation feature is designed to detect and flag harmful or inappropriate content, enabling you to maintain a safe and respectful user environment. After a file is flagged, you can take corrective actions, such as filtering the content or addressing users who create non-compliant material.

NOTE

This feature is currently in Beta.

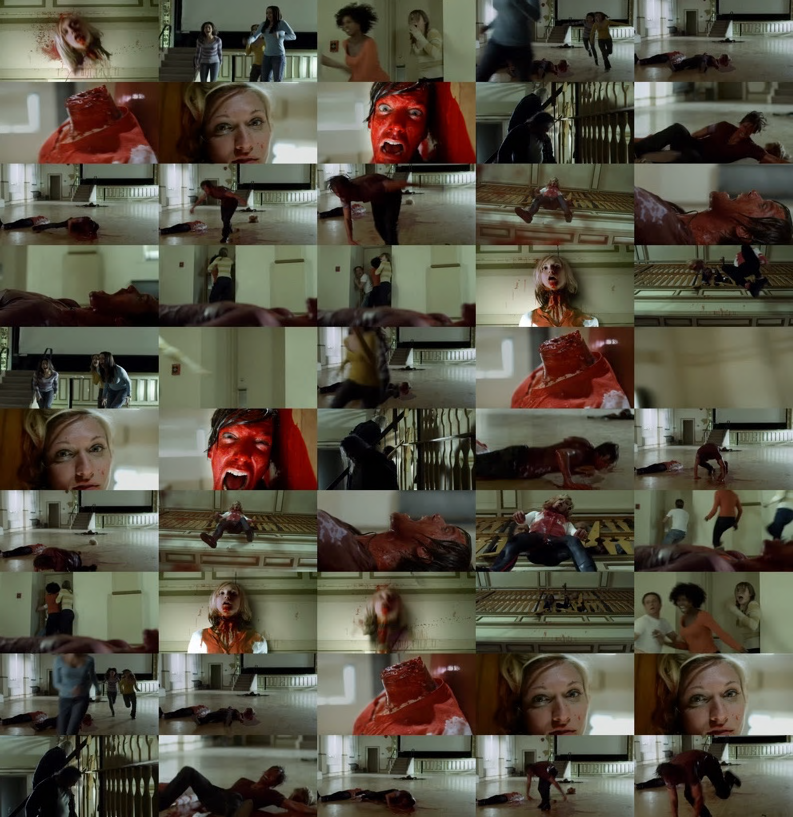

Example of NSFW Detection

We tested the NSFW filter using a sample video with diverse content. Here are the results:

- Violence: 0.94

- Graphic Violence: 0.85

- Self-Harm: 0.49

These scores range from 0 to 1, where higher scores indicate a stronger confidence in the detected category. The results demonstrate the filter's ability to detect potentially non-compliant content.

For more insights, explore our blog: AI Content Moderation Using NSFW and Profanity Filters.

Getting started with video moderation

To implement video moderation, use FastPix’s Create Media from URL or Upload Media from Device API endpoints. These endpoints analyze video or audio files for non-compliant content when uploading new media.

To enable moderation for media that was uploaded previously, use the Enable video moderation API endpoint. See how.

For the full event schema and payload details of the moderation-ready event, see video.media.ai.moderation.ready.

Steps for detecting new media

Step 1: Collect the URLs of the media files that you want to analyze for moderation. FastPix fetches these files using the pull method.

Step 2: Create a JSON request and send a POST request to the /on-demand endpoint:

In the JSON body of the FastPix API request, the following parameters are required:

- Type: Specify whether the media is video, audio, or av (both audio and video).

- URL: Provide the HTTPS URL of the video or audio file to be analyzed for moderation.

Request body for creating new media from URL

{
  "inputs": [
    {
      "type": "video",
      "url": "https://static.fastpix.io/fp-sample-video.mp4"
    }
  ],
  "accessPolicy": "public",
  "moderation": {
    "type": "av"
  }
}

Request body for creating new media by direct upload

If you are uploading media directly from a device or local storage, the moderation settings are placed inside pushMediaSettings, and the request body looks like this:

{
  "corsOrigin": "*",
  "pushMediaSettings": {
    "moderation": {
      "type": "av"
    },
    "accessPolicy": "public"
  }
}

See steps for detecting moderation in existing media.
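The request for creating media from a URL can be assembled in a few lines. The following is a minimal sketch, not an official client: the base URL `https://api.fastpix.io/v1`, the HTTP Basic auth scheme, and the helper names `moderation_body_from_url` and `build_create_request` are assumptions made for illustration, so check them against the API reference before use. The request is built but not sent.

```python
import base64
import json
import urllib.request

# Assumption: illustrative base URL; confirm it in the API reference.
API_BASE = "https://api.fastpix.io/v1"


def moderation_body_from_url(media_url: str, moderation_type: str = "av") -> dict:
    """Build the JSON body for creating media from a URL with moderation enabled."""
    if moderation_type not in {"video", "audio", "av"}:
        raise ValueError(f"unsupported moderation type: {moderation_type!r}")
    if not media_url.startswith("https://"):
        raise ValueError("the media URL must use HTTPS")
    return {
        # The input type mirrors the example request body above.
        "inputs": [{"type": "video", "url": media_url}],
        "accessPolicy": "public",
        "moderation": {"type": moderation_type},
    }


def build_create_request(body: dict, token_id: str, token_secret: str) -> urllib.request.Request:
    """Build (but do not send) the POST request to the /on-demand endpoint."""
    # Assumption: HTTP Basic auth with a token ID and secret.
    credentials = base64.b64encode(f"{token_id}:{token_secret}".encode()).decode()
    return urllib.request.Request(
        url=f"{API_BASE}/on-demand",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Basic {credentials}",
        },
        method="POST",
    )


body = moderation_body_from_url("https://static.fastpix.io/fp-sample-video.mp4")
request = build_create_request(body, "TOKEN_ID", "TOKEN_SECRET")
print(request.get_method(), request.full_url)
print(json.dumps(body, indent=2))
```

To send the request, pass it to `urllib.request.urlopen(request)` after replacing the placeholder credentials with your own.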

Categories for moderation

The NSFW filter analyzes content based on the type of media (audio or video) and flags it under specific categories:

For audio files: If the content is audio-only, the following categories are considered for moderation:

- Harassment

- Harassment/Threatening

- Hate

- Hate/Threatening

- Illicit

- Illicit/Violent

- Sexual/Minors

- Self-Harm

- Self-Harm/Intent

- Self-Harm/Instructions

- Sexual Content

- Violence

- Violence/Graphic

For video files: If the content is video-only, the following categories are considered for moderation:

- Self-Harm

- Self-Harm/Intent

- Self-Harm/Instructions

- Sexual Content

- Violence

- Violence/Graphic

Confidence score

Each category is assigned a confidence score between 0 and 1, where:

- A score close to 1 indicates a high confidence that the content belongs to the detected category, meaning the moderation detection is strong.

- A score close to 0 suggests low confidence, indicating that the content is less likely to fall under that category.
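In practice, confidence scores are usually compared against a cutoff that you choose. The sketch below filters the sample scores from the example earlier on this page. The function name `flagged_categories` and the threshold of 0.8 are illustrative assumptions, not values prescribed by FastPix.

```python
def flagged_categories(scores: dict[str, float], threshold: float = 0.8) -> list[tuple[str, float]]:
    """Return (category, score) pairs at or above the threshold, highest score first."""
    if not 0.0 <= threshold <= 1.0:
        raise ValueError("threshold must be between 0 and 1")
    flagged = [(category, score) for category, score in scores.items() if score >= threshold]
    return sorted(flagged, key=lambda item: item[1], reverse=True)


# Scores taken from the sample video results above.
sample_scores = {"Violence": 0.94, "Graphic Violence": 0.85, "Self-Harm": 0.49}
print(flagged_categories(sample_scores))
# [('Violence', 0.94), ('Graphic Violence', 0.85)]
```

A lower threshold catches more borderline content at the cost of more false positives, so tune it against your own review data.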

Accuracy in detection

NOTE

This beta version is better suited to videos shorter than 3 minutes.

The accuracy of NSFW detection depends on the length of your videos. For videos shorter than 3 minutes, accuracy is generally high because frames are sampled densely. As videos exceed 3 minutes, detection becomes less precise because fewer frames are analyzed per second of video.

That said, it's important to evaluate whether your specific use case truly requires NSFW moderation for long videos. Most platforms focusing on user-generated content (UGC) prioritize short-form videos, where moderation accuracy is optimal. For instance, popular platforms like TikTok and Instagram Reels operate within shorter video durations, making them ideal candidates for high-accuracy moderation.

Here’s a quick comparison of video durations across these platforms:

| Platform | Minimum Duration | Maximum Duration | Average Duration (Small Accounts) | Average Duration (Large Accounts) |

|---|---|---|---|---|

| TikTok | 3 seconds | 10 minutes | 40 seconds | 55 seconds |

| Instagram Reels | 15 seconds | 3 minutes | 45 seconds | 60 seconds |

As shown, the average video duration for both small and large accounts on TikTok and Instagram Reels is well below the 3-minute threshold. This aligns with the typical use case for most UGC platforms, where users engage with short, high-impact content.

While the idea of moderating long videos may seem appealing, it often doesn't align with the actual requirements of platforms leveraging NSFW detection. Instead, focusing on short-form videos ensures higher accuracy and efficient moderation, which are better suited for real-world applications.

NOTE

The current version of our video moderation feature is not yet a GRM standard. With the Phase 2 rollout, we expect to achieve greater precision.

Detecting moderation in existing media

If you wish to analyze media that has already been uploaded, use the Enable video moderation API endpoint and follow these steps:

- Step 1: Retrieve the media ID of the existing media you want to analyze for moderation.

- Step 2: Create a JSON request and send a PATCH request to the /on-demand/<mediaId>/moderation endpoint. Replace <mediaId> with the ID of the media you want to analyze for moderation.

Example request body:

{
  "type": "av"
}

Input parameters:

- Type: Specify whether the media is video, audio, or av (both audio and video).
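The two steps above can be sketched as a small helper that builds the PATCH request. This is an illustration under stated assumptions: the base URL `https://api.fastpix.io/v1`, the helper name `build_enable_moderation_request`, and the placeholder media ID are hypothetical, and authentication is left out. The request is built but not sent.

```python
import json
import urllib.parse
import urllib.request

# Assumption: illustrative base URL; confirm it in the API reference.
API_BASE = "https://api.fastpix.io/v1"


def build_enable_moderation_request(media_id: str, moderation_type: str = "av") -> urllib.request.Request:
    """Build (but do not send) the PATCH request for /on-demand/<mediaId>/moderation."""
    if moderation_type not in {"video", "audio", "av"}:
        raise ValueError(f"unsupported moderation type: {moderation_type!r}")
    if not media_id:
        raise ValueError("media_id must not be empty")
    # Escape the ID so it is always a single, safe path segment.
    path = f"/on-demand/{urllib.parse.quote(media_id, safe='')}/moderation"
    return urllib.request.Request(
        url=API_BASE + path,
        data=json.dumps({"type": moderation_type}).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PATCH",
    )


# "your-media-id" is a placeholder, not a real media ID.
request = build_enable_moderation_request("your-media-id")
print(request.get_method(), request.full_url)
```

Add your authentication header with `request.add_header(...)` and send the request with `urllib.request.urlopen(request)`.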

Accessing moderation results

To retrieve the moderation results for your media (video/audio), use the Get Media by ID endpoint.

Alternatively, you can listen for the video.mediaAI.moderation.ready event, which delivers the moderation data, including categories and confidence scores.

Example of the event data:

{
  "type": "video.mediaAI.moderation.ready",
  "object": {
    "type": "mediaAI",
    "id": "69f82b00-151c-45d4-942c-6eab719143b2"
  },
  "id": "449ffaa5-ba84-452a-b368-53da62d4641e",
  "workspace": {
    "id": "fd717af6-a383-4739-8d8c-b49aa732a8c0"
  },
  "moderationResult": [
    {
      "category": "Harassment",
      "score": 0.87
    },
    {
      "category": "Hate",
      "score": 0.57
    }
  ]
}

In this event, the moderationResult field contains a list of detected categories, along with their respective confidence scores.
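A receiver for this event typically checks the event type and then reads the category scores. The sketch below parses the example payload shown above. The function name `parse_moderation_event` is hypothetical, and the code assumes the payload arrives as a JSON string with the fields shown in the example.

```python
import json

EVENT_TYPE = "video.mediaAI.moderation.ready"


def parse_moderation_event(raw: str) -> dict[str, float]:
    """Return a {category: score} mapping from a moderation-ready event payload."""
    event = json.loads(raw)
    if event.get("type") != EVENT_TYPE:
        raise ValueError(f"unexpected event type: {event.get('type')!r}")
    return {
        item["category"]: float(item["score"])
        for item in event.get("moderationResult", [])
    }


raw_event = """
{
  "type": "video.mediaAI.moderation.ready",
  "object": {"type": "mediaAI", "id": "69f82b00-151c-45d4-942c-6eab719143b2"},
  "id": "449ffaa5-ba84-452a-b368-53da62d4641e",
  "workspace": {"id": "fd717af6-a383-4739-8d8c-b49aa732a8c0"},
  "moderationResult": [
    {"category": "Harassment", "score": 0.87},
    {"category": "Hate", "score": 0.57}
  ]
}
"""

scores = parse_moderation_event(raw_event)
print(scores)
# {'Harassment': 0.87, 'Hate': 0.57}
```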

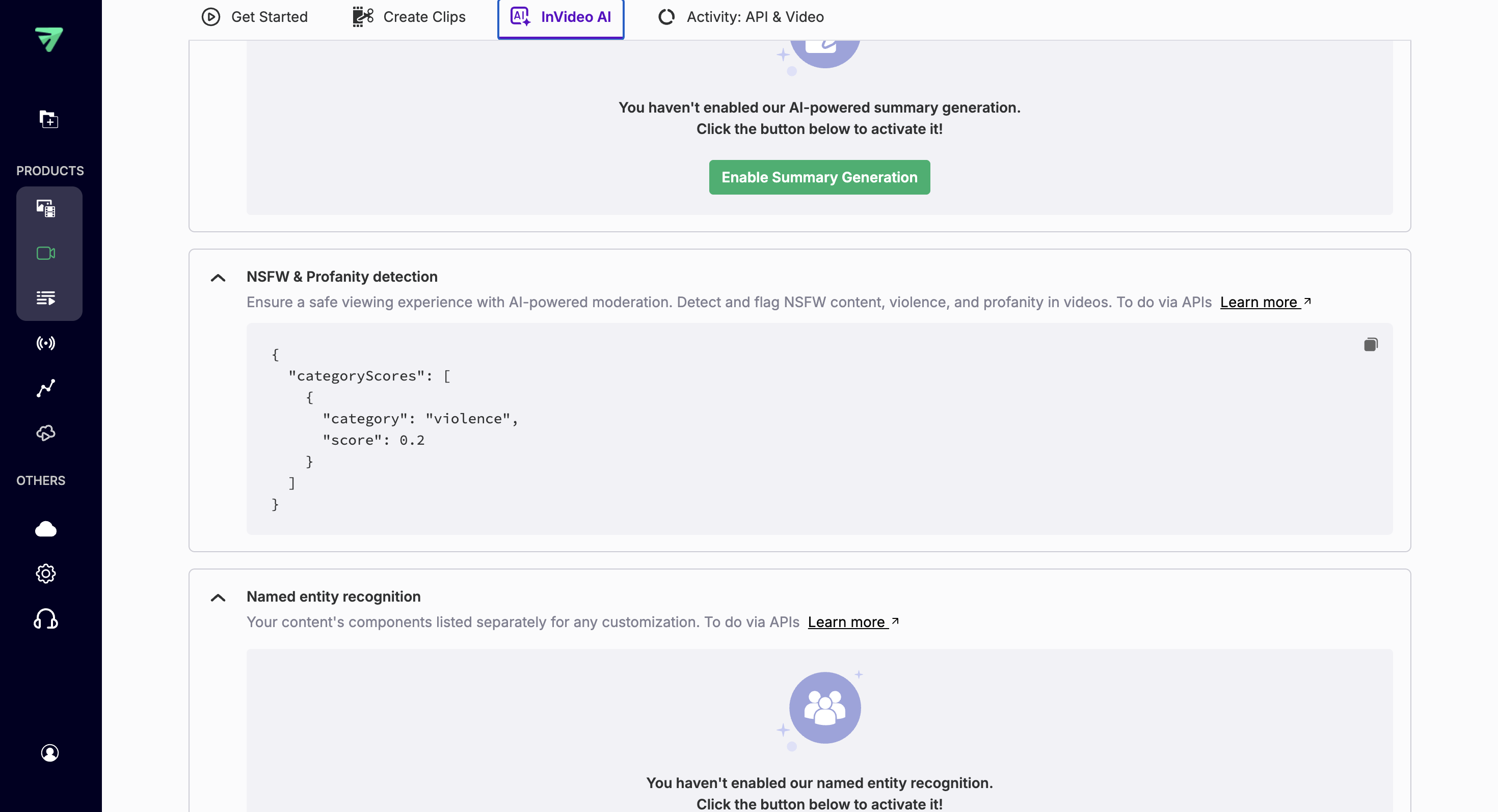

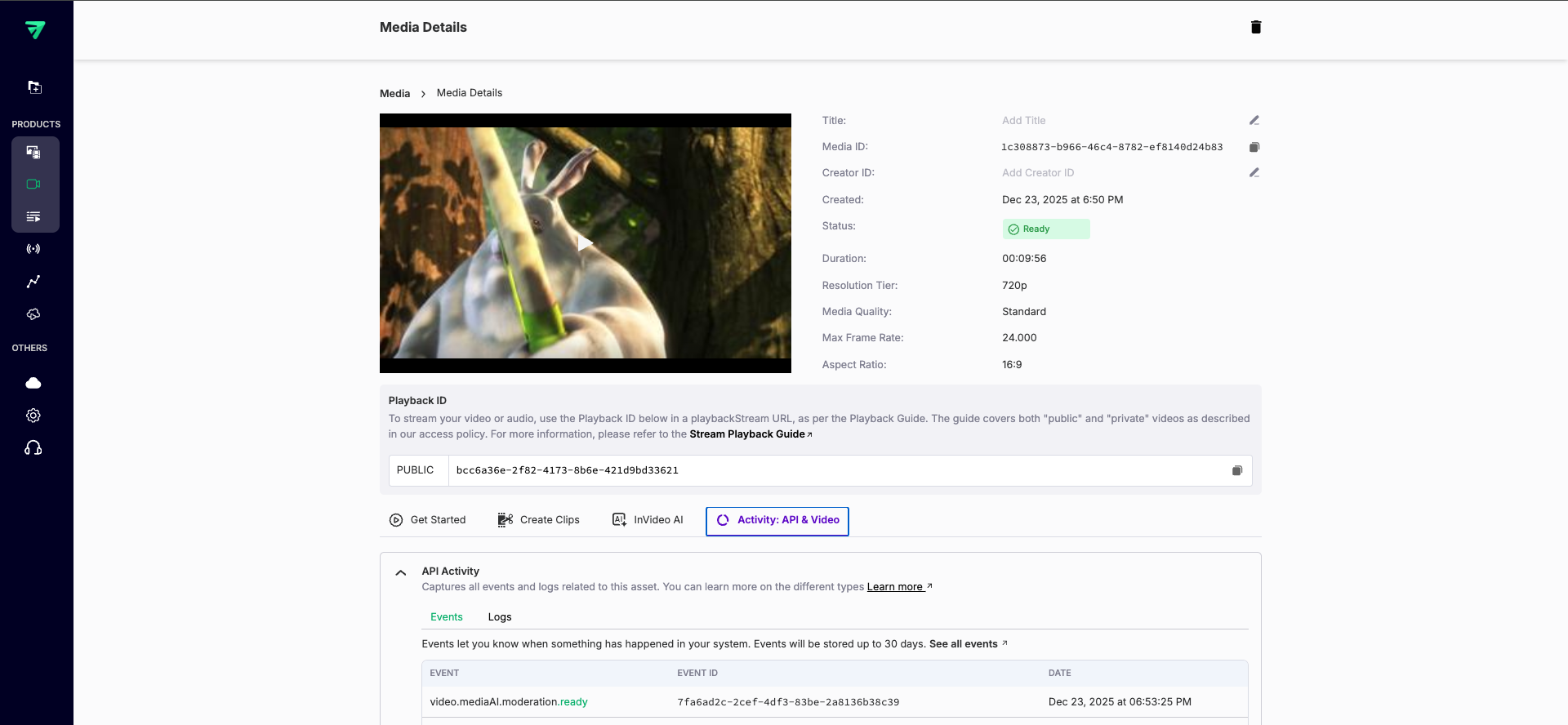

Using NSFW moderation from the FastPix dashboard

Follow these steps to run NSFW and profanity moderation from the FastPix dashboard:

- Open the Media item

  From the FastPix dashboard, go to Media → [your media item] and open the Media Details page.

- Open the InVideo AI tab

  On the Media Details page, click the Moderation or AI / Content Moderation tab to view moderation controls and history.

- Enable NSFW & profanity checks

  Click Enable NSFW & Profanity Detection.

- View results and events

  When moderation completes, results appear in the InVideo AI tab (categories, confidence scores). FastPix emits the video.mediaAI.moderation.ready event in the Activity: API & Video tab.

- Take action

  - To automatically block playback, update the media accessPolicy or playback rules when the event indicates a high-confidence detection.

  - For manual review, forward the event payload to your review queue or ticketing system.
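The "Take action" step can be expressed as a small triage rule over the entries in moderationResult. This is a sketch only: the function name `triage` and both thresholds (0.9 to block, 0.5 to review) are illustrative assumptions, and the actual blocking or ticketing calls are left to your own integration.

```python
def triage(results: list[dict], block_at: float = 0.9, review_at: float = 0.5) -> str:
    """Map moderation results to 'block', 'review', or 'allow' using the highest score."""
    if not 0.0 <= review_at <= block_at <= 1.0:
        raise ValueError("thresholds must satisfy 0 <= review_at <= block_at <= 1")
    top_score = max((item["score"] for item in results), default=0.0)
    if top_score >= block_at:
        return "block"   # e.g. update accessPolicy or playback rules
    if top_score >= review_at:
        return "review"  # e.g. forward the payload to a review queue
    return "allow"


event_results = [
    {"category": "Harassment", "score": 0.87},
    {"category": "Hate", "score": 0.57},
]
print(triage(event_results))
# review
```

Keying the decision on the highest score keeps the rule simple. Per-category thresholds are a natural refinement if some categories need stricter handling.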

General considerations

- Ensure correct media type: Always specify whether the media is an audio or video file. This is critical for accurate moderation detection, as different categories apply to each type.

- Media ID for existing content: When using the /on-demand/<mediaId>/moderation endpoint, make sure the mediaId is the correct ID of the existing media so that the right file is processed.

- Optional parameters: The accessPolicy and maxResolution parameters are optional but helpful for managing access permissions and media resolution for analysis.

Content classifications

Below is an overview of the categories detected by the moderation API, along with their descriptions and supported input types:

| Category | Description | Inputs |

|---|---|---|

| Harassment | Content promoting harassment toward any individual or group. | Audio only |

| Harassment/Threatening | Harassment combined with threats of violence or harm. | Audio only |

| Hate | Content expressing hate based on protected attributes (for example, race, gender). | Audio only |

| Hate/Threatening | Hate speech that includes violent threats. | Audio only |

| Illicit | Instructions or advice on committing illegal acts. | Audio only |

| Illicit/Violent | Illicit content with references to violence or weapons. | Audio only |

| Self-Harm | Content depicting or promoting acts of self-harm. | Audio, video |

| Self-Harm/Intent | Expressions of intent to engage in self-harm. | Audio, video |

| Self-Harm/Instructions | Instructions on committing self-harm acts. | Audio, video |

| Sexual | Explicit content intended to arouse or promote sexual services. | Audio, video |

| Sexual/Minors | Explicit content involving individuals under 18 years old. | Audio only |

| Violence | Depictions of physical harm or injury. | Audio, video |

| Violence/Graphic | Graphic depictions of physical harm or injury. | Audio, video |
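The table above can be encoded as a lookup to check which categories to expect for a given moderation type. This is a minimal sketch: the names `CATEGORY_INPUTS` and `categories_for` are hypothetical, and treating "av" as the union of the audio and video categories is an assumption rather than documented behavior.

```python
# Category -> supported inputs, transcribed from the table above.
CATEGORY_INPUTS = {
    "Harassment": {"audio"},
    "Harassment/Threatening": {"audio"},
    "Hate": {"audio"},
    "Hate/Threatening": {"audio"},
    "Illicit": {"audio"},
    "Illicit/Violent": {"audio"},
    "Self-Harm": {"audio", "video"},
    "Self-Harm/Intent": {"audio", "video"},
    "Self-Harm/Instructions": {"audio", "video"},
    "Sexual": {"audio", "video"},
    "Sexual/Minors": {"audio"},
    "Violence": {"audio", "video"},
    "Violence/Graphic": {"audio", "video"},
}


def categories_for(moderation_type: str) -> list[str]:
    """List the categories that apply to 'audio', 'video', or 'av'."""
    if moderation_type not in {"audio", "video", "av"}:
        raise ValueError(f"unsupported moderation type: {moderation_type!r}")
    # Assumption: 'av' covers every category that applies to audio or video.
    wanted = {"audio", "video"} if moderation_type == "av" else {moderation_type}
    return [name for name, inputs in CATEGORY_INPUTS.items() if inputs & wanted]


print(categories_for("video"))
print(len(categories_for("audio")))
```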

How NSFW detection works

Spritesheets are a core feature in FastPix’s NSFW detection, providing a structured and efficient method to analyze video frames for harmful or inappropriate content. By capturing representative snapshots of a video, spritesheets help streamline the detection process.

We’re evolving how we use spritesheets for NSFW detection to address varying content moderation needs. Here's an in-depth look at our approach across the different phases:

Phase 1: What we currently have (Beta)

In the first phase of spritesheet-based NSFW detection, a fixed number of thumbnails are generated based on the video duration. This method provides a balance between processing efficiency and accuracy:

For videos shorter than 15 minutes:

A total of 50 fixed thumbnails are generated. For example:

- A 6-minute video (360 seconds) produces a thumbnail every 7.2 seconds.

- A 10-minute video (600 seconds) produces a thumbnail every 12 seconds.

For videos longer than 15 minutes:

A total of 100 fixed thumbnails are generated. For example:

- A 1-hour video (3,600 seconds) produces a thumbnail every 36 seconds.

- A 2-hour video (7,200 seconds) produces a thumbnail every 72 seconds.

This approach ensures that key frames are captured without overwhelming the processing system. However, it may lead to reduced detection accuracy for longer videos, as fewer frames per second are analyzed.
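The Phase 1 sampling arithmetic can be reproduced with a short calculation. The function names below are hypothetical. The text does not say how a video of exactly 15 minutes is handled, so placing it in the 100-thumbnail tier is an assumption.

```python
def phase1_thumbnail_count(duration_seconds: float) -> int:
    """Fixed thumbnail count: 50 below 15 minutes, otherwise 100."""
    # Assumption: a video of exactly 15 minutes falls in the longer tier.
    return 50 if duration_seconds < 15 * 60 else 100


def phase1_interval_seconds(duration_seconds: float) -> float:
    """Seconds of video between consecutive thumbnails in Phase 1."""
    if duration_seconds <= 0:
        raise ValueError("duration must be positive")
    return duration_seconds / phase1_thumbnail_count(duration_seconds)


for duration in (360, 600, 3600, 7200):
    print(duration, phase1_interval_seconds(duration))
```

The loop prints intervals of 7.2, 12.0, 36.0, and 72.0 seconds, matching the examples above. The same formula gives 3.6 seconds for a 3-minute video.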

Phase 2: Upcoming enhancements

Recognizing the limitations of Phase 1, our upcoming Phase 2 plan introduces significant enhancements to spritesheet generation to improve accuracy and granularity in detecting NSFW content.

Increased thumbnail granularity

- Thumbnails are generated at a rate of one per second, regardless of video duration.

- This approach ensures that every second of the video is analyzed, capturing nuanced frames that may contain harmful content.

- Example:

- A 6-minute video has 360 thumbnails (one for each second).

- A 2-hour video has 7,200 thumbnails, providing far greater coverage than the fixed 100 in Phase 1.
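To compare the two phases, the thumbnail counts can be computed side by side. This is a sketch with hypothetical function names. Rounding a fractional duration up to the next whole second in Phase 2, and treating a video of exactly 15 minutes as the longer Phase 1 tier, are both assumptions.

```python
import math


def phase1_thumbnail_count(duration_seconds: float) -> int:
    """Phase 1: 50 thumbnails below 15 minutes, otherwise 100."""
    return 50 if duration_seconds < 15 * 60 else 100


def phase2_thumbnail_count(duration_seconds: float) -> int:
    """Phase 2: one thumbnail per second of video."""
    if duration_seconds <= 0:
        raise ValueError("duration must be positive")
    # Assumption: a partial final second still gets its own thumbnail.
    return math.ceil(duration_seconds)


def coverage_gain(duration_seconds: float) -> float:
    """How many times more thumbnails Phase 2 produces than Phase 1."""
    return phase2_thumbnail_count(duration_seconds) / phase1_thumbnail_count(duration_seconds)


for duration in (360, 7200):
    print(duration, phase2_thumbnail_count(duration), coverage_gain(duration))
```

For the 2-hour example this yields 7,200 thumbnails, 72 times the fixed 100 of Phase 1.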

Enhanced audio analysis

- For audio content, timestamps are captured whenever explicit or inappropriate language is detected.

- These timestamps can be used for precise interventions, such as censoring or beeping specific words.

Improved processing algorithms

- Advanced algorithms are integrated to analyze higher volumes of data while maintaining processing speed.

- This allows the system to scale effectively for longer and more complex videos.
